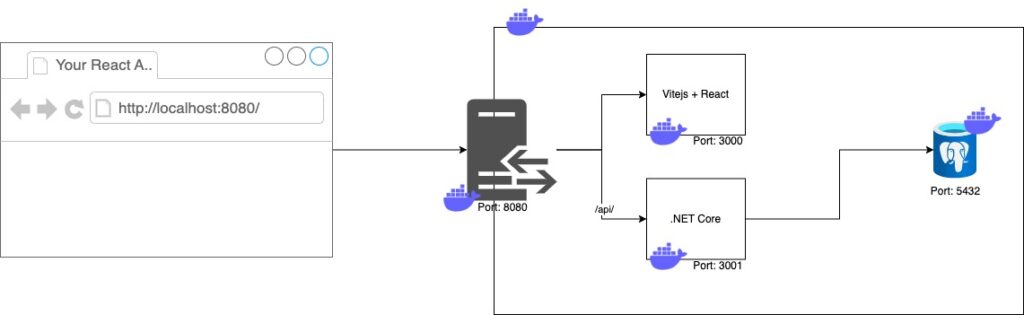

I wanted to write a short article on how to get all four components working together in Docker: a ViteJS + React frontend, a .NET Core backend, a PostgreSQL database, and an Nginx reverse proxy. That way you don't need to install a bunch of libraries on your machine. I'm doing this so that I can work on my frontend, backend, and SQL independently. Nginx acts as a reverse proxy in front of my services.

When I was using my Google-fu to find a solution, it seemed like there was no single place where the answer lay. There were explanations for all sorts of different scenarios, but none quite like mine. I also made some updates over time (and switched technology, e.g. from NodeJS to ViteJS), which broke things like ports and port mappings.

One of my requirements was that the frontend recompile on every change, so I don't need to rebuild and restart the container. I'd like the same for the backend, but I haven't invested the time yet. My other requirement was separate repositories for the frontend, backend, and proxy. There is no practical reason for this other than wanting a clean system design.

ViteJS + React

This is a really simple setup. We start from the official Node.js image, set the working directory, copy package.json, install the packages, copy everything else, expose the ViteJS dev server port, and finally run the dev server.

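```dockerfile
FROM node:18-alpine
WORKDIR /app
# copy package.json on its own first so the install layer is reused until dependencies change
COPY package.json .
RUN yarn install
COPY . .
# ViteJS dev server port (Vite's default is 5173, so this assumes server.port is set to 3000)
EXPOSE 3000
CMD [ "yarn", "dev" ]
```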

Notice that this doesn't mount your local source directory: the code is copied into the image at build time. So if you run this container on its own, you'll need to rebuild and restart it to pick up any changes. The fun comes when we use our docker-compose file.
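The Dockerfile exposes port 3000, but the ViteJS dev server listens on port 5173 and only on localhost by default, which would make it unreachable from outside its container. I'm assuming your Vite config already handles this; if not, here is a minimal TypeScript sketch of dev-server options that do. It is meant to be merged into the server section of an existing vite.config.ts; the exported name server is just for illustration.

```typescript
// Dev-server options for running ViteJS inside a container. Merge these into
// the "server" section of your existing vite.config.ts (keep your plugins).
export const server = {
  // Listen on all interfaces (0.0.0.0), not just localhost, so Docker can
  // forward traffic from the published port into the container.
  host: true,
  // Match EXPOSE 3000 and the 3000:3000 mapping (Vite's default is 5173).
  port: 3000,
  // Exit with an error instead of silently switching to another port.
  strictPort: true,
  watch: {
    // Optional: poll for changes when file events from the bind mount don't
    // reach the container (this can happen on Windows, e.g. with WSL2).
    usePolling: true,
  },
};
```

In vite.config.ts this would be used as defineConfig({ plugins: [react()], server }), keeping whatever plugins you already have. Polling costs some CPU, so leave it off if changes are already being picked up.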

.NET Core

Again, we have a simple Dockerfile that's pretty much derived from the official examples. It's a multi-stage build: first we build and publish the app using the SDK image, then we run it using the smaller ASP.NET Core runtime image. The port we want to expose is 8080. Since .NET 8, the official ASP.NET Core container images configure apps to listen on port 8080 by default (earlier versions used port 80), so unless you've overridden the port in your app's configuration, 8080 is the one to expose.

```dockerfile
# Learn about building .NET container images:
# https://github.com/dotnet/dotnet-docker/blob/main/samples/README.md
FROM mcr.microsoft.com/dotnet/sdk:8.0-alpine AS build
WORKDIR /source

# copy everything and restore NuGet packages
COPY . .
RUN dotnet restore --use-current-runtime

# build and publish the app
RUN dotnet publish --use-current-runtime --self-contained false --no-restore -o /app

# final stage/image: runtime only, no SDK
FROM mcr.microsoft.com/dotnet/aspnet:8.0-alpine
EXPOSE 8080
WORKDIR /app
COPY --from=build /app .
# optional: list the published files in the build log
RUN ls -al
# replace Your.Library.dll with your project's assembly name
ENTRYPOINT ["dotnet", "Your.Library.dll"]
```

Again, you can build and run this image on its own, and your .NET Core app should start without problems. But will the containers play nicely together? And how do you make it behave like a production setup, where everything is served from the same address but under different paths? That's where Nginx comes in.

Nginx

For the Nginx image, you don't need to do much: just copy over the config file.

```dockerfile
FROM nginx
COPY nginx.conf /etc/nginx/conf.d/default.conf
```

What does that config file look like?

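```nginx
server {
    listen 80;
    server_name localhost;

    # "api" and "app" are docker-compose service names, resolved by Docker's DNS.
    # The trailing slash in proxy_pass replaces the matched /api/ prefix with /,
    # so /api/users reaches the .NET app as /users.
    location /api/ {
        proxy_pass http://api:8080/;
    }

    # No URI part in proxy_pass: the request path is forwarded unchanged.
    location / {
        proxy_pass http://app:3000;
        # Optional: uncomment to let Vite's hot-reload websocket through the proxy.
        # proxy_http_version 1.1;
        # proxy_set_header Upgrade $http_upgrade;
        # proxy_set_header Connection "upgrade";
    }
}
```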
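To make the two location blocks concrete, here is a simplified TypeScript model of where each request path ends up. The proxyTarget function is hypothetical, not an nginx API. It mirrors only these two prefix locations and the proxy_pass trailing-slash rule; it ignores regex locations, query strings, and nginx's automatic 301 redirect from /api to /api/.

```typescript
// Simplified model of the nginx.conf routing above (not real nginx).
interface NginxLocation {
  prefix: string;   // the location prefix
  upstream: string; // scheme://host:port from proxy_pass
  uri?: string;     // URI part of proxy_pass, if present (e.g. "/")
}

// location /api/ { proxy_pass http://api:8080/; }
// location /     { proxy_pass http://app:3000; }
const locations: NginxLocation[] = [
  { prefix: "/api/", upstream: "http://api:8080", uri: "/" },
  { prefix: "/", upstream: "http://app:3000" },
];

function proxyTarget(path: string): string {
  // nginx picks the longest matching prefix location.
  const candidates = locations.filter((l) => path.startsWith(l.prefix));
  if (candidates.length === 0) throw new Error(`no location matches ${path}`);
  const best = candidates.reduce((a, b) => (b.prefix.length > a.prefix.length ? b : a));
  // With a URI in proxy_pass, the matched prefix is replaced by that URI;
  // without one, the original path is forwarded unchanged.
  const forwarded =
    best.uri !== undefined ? best.uri + path.slice(best.prefix.length) : path;
  return best.upstream + forwarded;
}

console.log(proxyTarget("/api/weatherforecast")); // http://api:8080/weatherforecast
console.log(proxyTarget("/src/main.tsx"));        // http://app:3000/src/main.tsx
```

The API therefore never sees the /api/ prefix, so its routes are defined as if it were served from the root.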

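Again, you can build this image on its own, but it won't work yet: the hostnames api and app in the config only resolve on the network that Docker Compose creates for the services. That's where we need our magic docker-compose file.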

```yaml
# No top-level "version" key: Docker Compose v2 treats it as obsolete and ignores it.
services:
  api:
    build:
      context: ../Path/to/.net/app/.
      dockerfile: Dockerfile
    ports:
      - 3001:8080 # host:container

  app:
    build:
      context: ../Path/to/react/app/.
      dockerfile: Dockerfile
    ports:
      - 3000:3000
    volumes:
      - ../path/to/react/app:/app # bind-mount the source so ViteJS sees changes
      - /app/node_modules/        # anonymous volume: keep the image's node_modules

  proxy:
    build:
      context: .
      dockerfile: Dockerfile
    ports:
      - 8080:80
    depends_on:
      - app
      - api

  db:
    image: postgres:15.7
    restart: unless-stopped
    volumes:
      - ~/apps/postgres:/var/lib/postgresql/data
    environment:
      POSTGRES_PASSWORD: postgres # development only
      POSTGRES_USER: postgres
    ports:
      - '5432:5432'
```

OK, let's look at what's going on here, as it's a little complex. The docker-compose file defines several services. The first is what I've called api: the .NET Core app. In nginx.conf, requests under /api/ are forwarded to this service on port 8080. What I found out was that when one service talks to another inside the Docker network, it must use the service name and the internal (container) port, e.g. http://api:8080. The external (published) ports, such as 3001, are what your browser or a headless REST client on your machine uses.
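To make the internal/external distinction concrete, here's a small TypeScript sketch. The addressFor helper is hypothetical (nothing in Docker or Compose exposes it); it only parses the two-part HOST:CONTAINER short syntax used in this file and returns the address a client should use depending on where it runs. It's the same rule that puts api:8080, not localhost:3001, in nginx.conf.

```typescript
// Hypothetical helper illustrating published vs. internal ports in Compose.
// Only the two-part "HOST:CONTAINER" short syntax used in this file is handled.
type Caller = "host" | "container";

function addressFor(service: string, mapping: string, caller: Caller): string {
  const parts = mapping.split(":");
  if (parts.length !== 2) throw new Error(`unsupported port mapping: ${mapping}`);
  const [hostPort, containerPort] = parts;
  // From your machine (browser, REST client): localhost + the published host port.
  // From another container on the Compose network: service name + container port.
  return caller === "host" ? `localhost:${hostPort}` : `${service}:${containerPort}`;
}

console.log(addressFor("api", "3001:8080", "host"));      // localhost:3001
console.log(addressFor("api", "3001:8080", "container")); // api:8080
// e.g. the host and port the API would use in its Postgres connection string:
console.log(addressFor("db", "5432:5432", "container"));  // db:5432
```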

```yaml
api:
  build:
    context: ../Path/to/.net/app/.
    dockerfile: Dockerfile
  ports:
    - 3001:8080
```

Digging deeper into the API service, the port I publish on the host is 3001 (as per my diagram), mapped to port 8080 inside the container, where the .NET Core app listens by default. To tell Compose how to build the image, we give it a build context (the path to the project) and the Dockerfile to use (handy if you keep separate production and development Dockerfiles).

```yaml
app:
  build:
    context: ../Path/to/react/app/.
    dockerfile: Dockerfile
  ports:
    - 3000:3000
  volumes:
    - ../path/to/react/app:/app
    - /app/node_modules/
```

Next is app, the React + ViteJS application. Again, we tell Compose how to build it and where the Dockerfile is. We also publish a port; this time I've simply mapped host port 3000 to the same container port. The main difference here is volumes, which let you mount local directories into the container. In this case, we bind-mount our local project directory over /app, where the Dockerfile originally copied the code. The second entry, /app/node_modules/, is an anonymous volume: Docker fills it with the node_modules installed in the image, so your local folder (which may have no node_modules, or ones built for a different OS) doesn't hide them. Because the container now sees your source files live, ViteJS (or any other framework with a dev server) can hot reload on changes.
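The way those two volume entries combine can be modelled as: for any path inside the container, the mount with the longest matching target wins, and anything outside both comes from the image. Here is a simplified TypeScript sketch of that rule for the app service. The mountFor helper is hypothetical and only illustrative; Docker applies this when it sets up the container's filesystem, not through any API like this.

```typescript
// Simplified model of the app service's mounts: a path inside the container
// is served by the mount whose target is the longest matching prefix.
interface Mount {
  target: string; // path inside the container
  source: string; // what backs it
}

const appMounts: Mount[] = [
  { target: "/app", source: "host bind mount" },               // ../path/to/react/app:/app
  { target: "/app/node_modules", source: "anonymous volume" }, // /app/node_modules/
];

function mountFor(containerPath: string, mounts: Mount[]): string {
  const hits = mounts.filter(
    (m) => containerPath === m.target || containerPath.startsWith(m.target + "/"),
  );
  // Paths outside every mount come straight from the image.
  if (hits.length === 0) return "image filesystem";
  const best = hits.reduce((a, b) => (b.target.length > a.target.length ? b : a));
  return best.source;
}

console.log(mountFor("/app/src/App.tsx", appMounts));                 // host bind mount
console.log(mountFor("/app/node_modules/react/index.js", appMounts)); // anonymous volume
```

One practical consequence: when you add a dependency and rebuild, Compose may reuse the anonymous node_modules volume from the previous container, so the new package seems to be missing. Running docker compose up --build --renew-anon-volumes recreates it from the fresh image.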

```yaml
proxy:
  build:
    context: .
    dockerfile: Dockerfile
  ports:
    - 8080:80
  depends_on:
    - app
    - api
```

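Here you can see the usual build context and Dockerfile for this service. Host port 8080 is our public entry point for the reverse proxy, mapped to port 80, where Nginx listens. Lastly, I've added depends_on for app and api, the two services this reverse proxy forwards to, so Compose starts them before the proxy; otherwise Nginx may fail to start because it can't resolve their hostnames. Note that depends_on only waits for the containers to start, not for the apps inside them to be ready.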
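Conceptually, depends_on turns the services into a dependency graph, and Compose starts each service's dependencies before the service itself. The TypeScript sketch below illustrates that ordering with a plain depth-first topological sort; startOrder and composeDeps are hypothetical names, and this is not how Compose is implemented internally. Compose is free to start independent services such as api, app, and db in any order or in parallel; the sketch only shows the constraint that proxy comes after app and api.

```typescript
// Hypothetical sketch: order services so every dependency starts first
// (a depth-first topological sort over depends_on; not Compose's own code).
const composeDeps: Record<string, string[]> = {
  api: [],
  app: [],
  proxy: ["app", "api"], // depends_on in the proxy service
  db: [],
};

function startOrder(graph: Record<string, string[]>): string[] {
  const order: string[] = [];
  const state = new Map<string, "visiting" | "done">();

  const visit = (service: string): void => {
    const s = state.get(service);
    if (s === "done") return;
    if (s === "visiting") throw new Error(`dependency cycle involving ${service}`);
    state.set(service, "visiting");
    for (const dep of graph[service] ?? []) visit(dep); // start dependencies first
    state.set(service, "done");
    order.push(service);
  };

  for (const service of Object.keys(graph)) visit(service);
  return order;
}

console.log(startOrder(composeDeps).join(", ")); // api, app, proxy, db
```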

I’m not going to go into Postgres as that’s pretty much the default setup with some username/password combo you should not use for production.

And that's it. Run docker compose up --build and Compose does the heavy lifting for you; the whole stack is then reachable through the proxy at http://localhost:8080. There's no need to install additional .NET or JS libraries on your machine if you're only developing one side of the project.
